“The bad implementation of a great idea will always become a great example of a really bad idea!” – Futurist Jim Carroll

A few years ago, during the Q&A section of a presentation I gave in São Paulo, Brazil, I was asked by the moderator what I thought were the biggest issues faced by organizations going forward. I indicated that it was probably the lack of respect, diligence, and care around issues of computer and network security and that organizations would end up paying a big price for their negligence in what we might call “technology governance.”

What’s that? Governance? It’s the framework that an organization puts in place to guard against future issues, risks, and challenges. I remember saying on stage that what we were currently witnessing with the arrival of many ‘smart devices’ was the bad implementation of a great idea – since much of the technology out there was suffering from security flaws and other challenges. Organizations that were bringing them to market didn’t pay enough attention to the issue of ‘governance’ – they didn’t have an architecture in place that would guard against such flaws – with the result that a lot of problems were happening.

I was thinking about this yesterday while I was knee-deep in the research I’m doing to prepare for a talk to a group of 60 CEOs for an upcoming event – the topic, of course, being AI. My research was confirming my core belief that going forward, in their rush to implement new AI opportunities, many companies are going to fail with security, privacy, and other issues – and so we will once again see a new flood of bad implementations of a great idea.

Consider our past – and the Internet of Things, or IoT as we call it. It’s a great idea – as the Internet made its way into homes, there emerged big opportunities for smart devices. Webcam alarm systems, smart doorbells, smart thermostats, and more. The concept is fantastic – hyperconnectivity allows the reinvention of previously unconnected technologies into something new. And so we saw the emergence of the Nest thermostat, the Ring doorbell, and other technologies.

Yet we saw a really bad implementation of this great idea. We saw a flood of products that were not well architected and lacked robust security, and this led to all kinds of problems – essentially, smart home devices that were not secure and could be easily hacked. Think about it – the last few years have seen smart locks that can be easily bypassed; smart thermostats that can be remotely controlled; and even smart refrigerators that can be used to send spam emails.

I wrote about this a few years back in a long blog post, commenting:

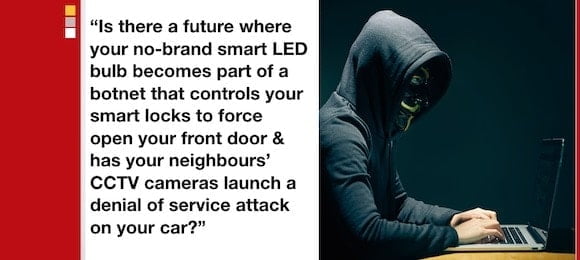

Here’s the thing: most #IOT (Internet of Things) projects today are a complete failure – they are insecure, built on old outdated Linux stacks with mega-security holes, and ill-thought-out architectures. My slide on that fact? Simple: a reality which is already happening today. This type of negligence will doom the future of the products of many of the early pioneers.

Trend: IOT? It’s Early Days Yet, and Most Products So Far are a Big Failure!

The post, “Trend: IOT? It’s Early Days Yet, and Most Products So Far Are a Big Failure” went to the root of the problem in this slide:

How bad was the implementation of this great idea? Things were so bad that a popular social media account, InternetofS***, spends all of its time documenting the security and privacy failures inherent to many smart devices.

In my post, I went further, suggesting that what was needed was an ‘architecture’ – or a governance framework for device design and implementation – that would guard against the emergence of such risks. The architecture would ensure that any product design paid due respect to a set of core principles – what I call the “11 Rules of IoT Architecture.”

So here’s the thing: if organizations are going to build a proper path into the hyperconnected future, they need to understand and follow my “11 Rules of #IoT Architecture.” Read it, print it, learn from it: this is what you need to do as you transition to becoming a tech company. Some of the biggest organizations in the world have had me in for this detailed insight – maybe you should.

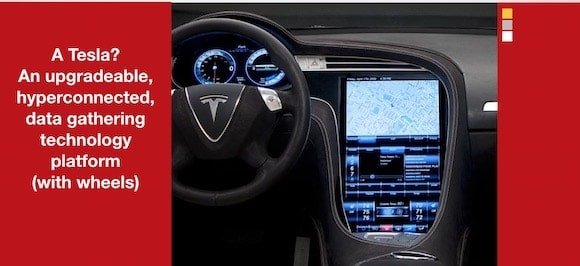

My inspiration for how to build the future right comes from Apple’s robust Device Enrollment Program architecture, which lets an organization deploy, manage, upgrade and oversee thousands of corporate iPhones or iPads; and Tesla, which is not really a car company, but a hi-tech company.

And so in both of these talks, I put into perspective how Tesla has (without knowing it, LOL) been following my rules. First, think about what a Tesla really is – here’s my slide….

Going back to my list of the 11 Rules of IOT Architecture, you can see that Tesla has met a number of the conditions …

This architecture provides a governance framework that guards against and mitigates inherent risk. Over time, many organizations began to think about this type of thing, and they realized they could not rush great ideas to market with bad design.

Which brings me back to AI. Many organizations are rushing to get involved with this shiny new toy – either integrating it into their software platform or implementing fancy new AI tools. But the risks in doing so are real – private, internal corporate documents and information might be used in a ChatGPT prompt and, over time, become exposed; private large language model systems might be implemented using the same documents, and a future security or privacy leak might expose those same documents to the public. Other risks abound. At least some people are worried about this:

Ahead of a Salesforce event focused on AI, the company is unveiling security standards for its technology, including preventing large language models from being trained on customer data. “Every client we talk to, this has been their biggest concern,” said Adam Caplan, senior vice president of AI, of the potential for confidential information to leak through the use of these models.

Salesforce touts AI strategy, doubles investment in startups, Bloomberg, 19 June 2023

Everything we have done wrong in the past might be repeated in the future if we don’t pay attention to the issue – if we don’t put in place a structure for AI governance.

Because the bad implementation of a great idea is just a great example of a really bad idea!
