I can – when the World Wide Web emerged in early 1993, and corporate groups, associations, and organizations were scrambling to establish a presence and figure out a strategic purpose. It almost seems like it’s 1993 all over again!

As a futurist, I’ve been writing and speaking about A.I. for quite some time, but I too will admit that the speed of the arrival of these new tools caught me by surprise – as did the level of interest and adoption.

There can’t be a bigger story in tech this year. Suffice it to say, in 2023, you are already finding that you are going to be OVERWHELMED by all things AI-related.

The key issue for you is: What are you going to do about it?

You are probably already running this question through your mind. As an association executive, how will you ensure your members are up to date with the implications, both good and bad, as the technology begins to impact your industry? How do you provide education and knowledge to your membership base, knowing that they are probably already feeling overwhelmed by the speed of what is occurring at the same time they are enamored of what it represents? How should your association come up with a cogent and responsible industry position with respect to the deep ethical, copyright, and risk issues that it represents?

As a corporate group, how should you approach the AI issue from a strategic perspective, and not chase it with strategic abandon driven by what I call ‘shiny object syndrome’? How do you invest in it wisely, in a way that is aligned with your strategic goals, not something that you throw money at with wild abandon? How do you do a deep dive into the disruptive impact that it represents on your business model while ensuring at the same time that you develop and enhance your expertise with it?

These are the questions I am already seeing appear with inquiries into my speaking activities, which is why I’ve recently begun to highlight the topic with my offerings to clients: “The Acceleration of Artificial Intelligence and the Rise of the Robots – Promise and Peril in the Next Technological Transformation.” I expect to be spending a LOT of time on stage with this topic in 2023 into 2024 and beyond.

With that in mind, let’s give you a brief overview of SOME of the things you should be thinking about!

The Sudden Velocity of AI

Obviously, AI isn’t new – the idea has been with us forever, and the technology in all its shapes and forms has been advancing bit by exponential bit each year. And then suddenly, it exploded in the public consciousness with ChatGPT!

Indeed, the advances in artificial intelligence this year have been staggering and impressive. While anyone who tracks the future has always kept an eye on the slow and steady advance of AI technologies, the sudden acceleration this year fits the main theme of how we track the future – in that ‘the future happens slowly and then, all at once.’

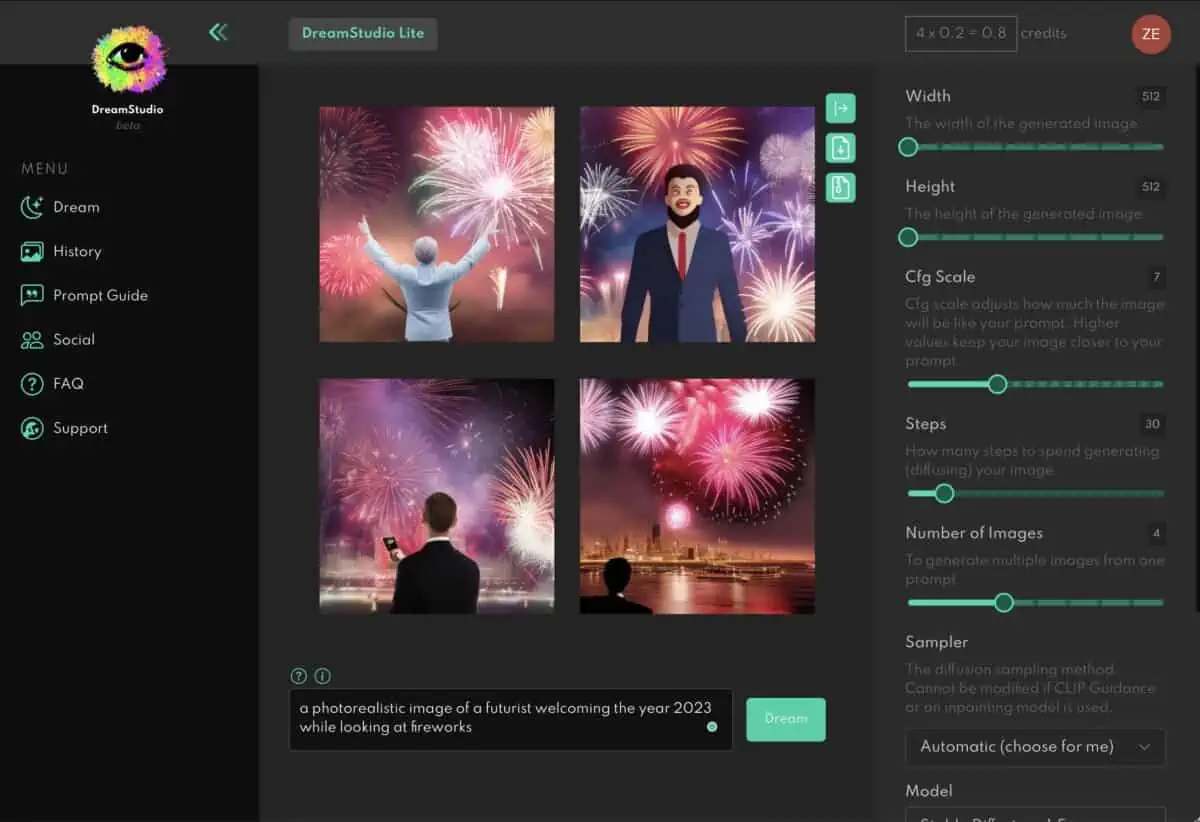

AI happened slowly, and then this year, all at once! And so we end up with a year in which we can ask a computer to draw us a picture of a futurist watching fireworks while welcoming in 2023.

Or we can ask a different computer to tell us why AI is the most important tech story of the year, and it offers up a fairly plausible yet somewhat modest paragraph:

And we can top it off with some interesting AI-generated selfies guaranteed to drive our family crazy!

The implications of AI, both good and bad, have long been known.

And yet, this year, the rapid advances seen with these two technologies have made crystal clear the upsides and downsides of A.I. Artists and creative industries are hugely impacted by the arrival of complex new creative engines; teachers and professors will be challenged with students submitting AI-generated term papers and reports; the dumbing down of society will accelerate with massive new text and image generating engines that will further blur the boundary between reality and fantasy. Much of this might not end well, and I’ve been stressing that we as a society don’t know how to deal with it at all.

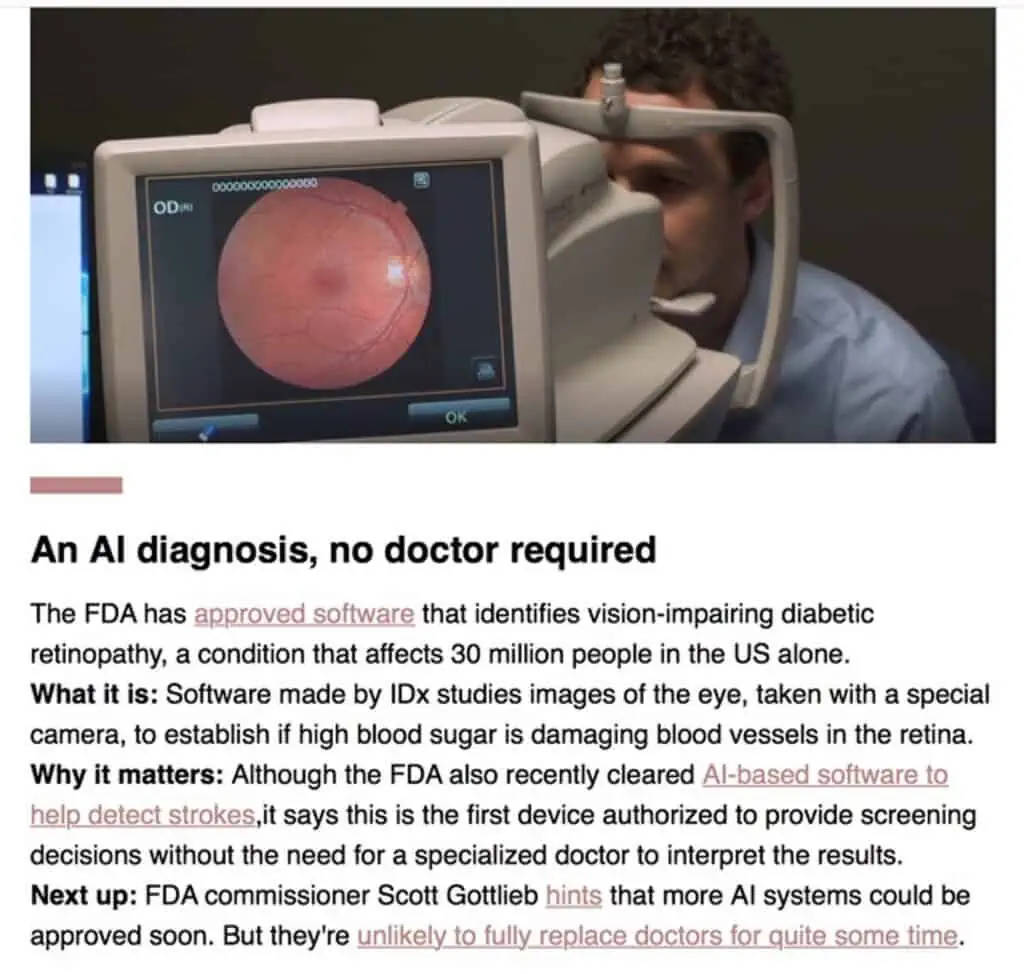

And yet, at the same time, the opportunities are impressive and far-reaching. AI has played, and will continue to play, a growing role in medical diagnosis and research; the world of construction and manufacturing will accelerate through the continuous deployment of intelligent, connected AI-driven smart robotic technology; the very nature of scientific research will be forever changed.

The Sudden Acceleration of AI

So why was 2022 the year that it suddenly went ‘supernova’?

One reason is that it has been here for some time, but has been happening quietly, behind the scenes in medicine, pharma, and other science-heavy fields. Most people didn’t notice it until the sudden arrival of Stable Diffusion and ChatGPT brought it to the forefront and made it real.

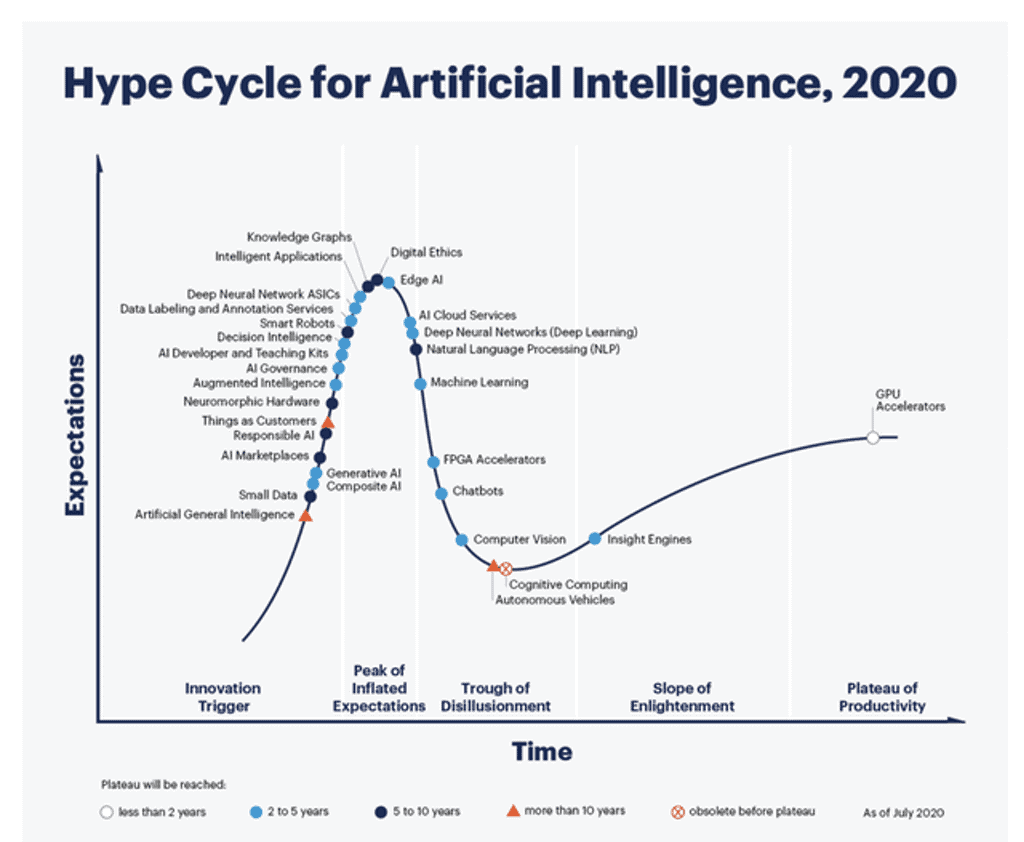

Consider the hype-cycle stats for all the many different aspects of AI:

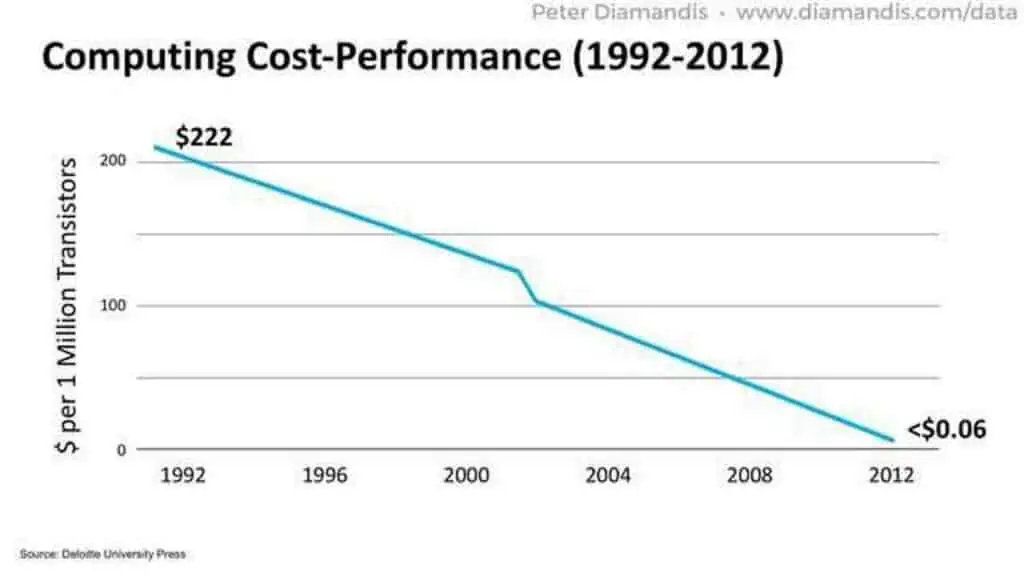

Various aspects have been moving steadily along the curve, yet accelerating simply because the cost to ‘do’ AI continues to collapse.

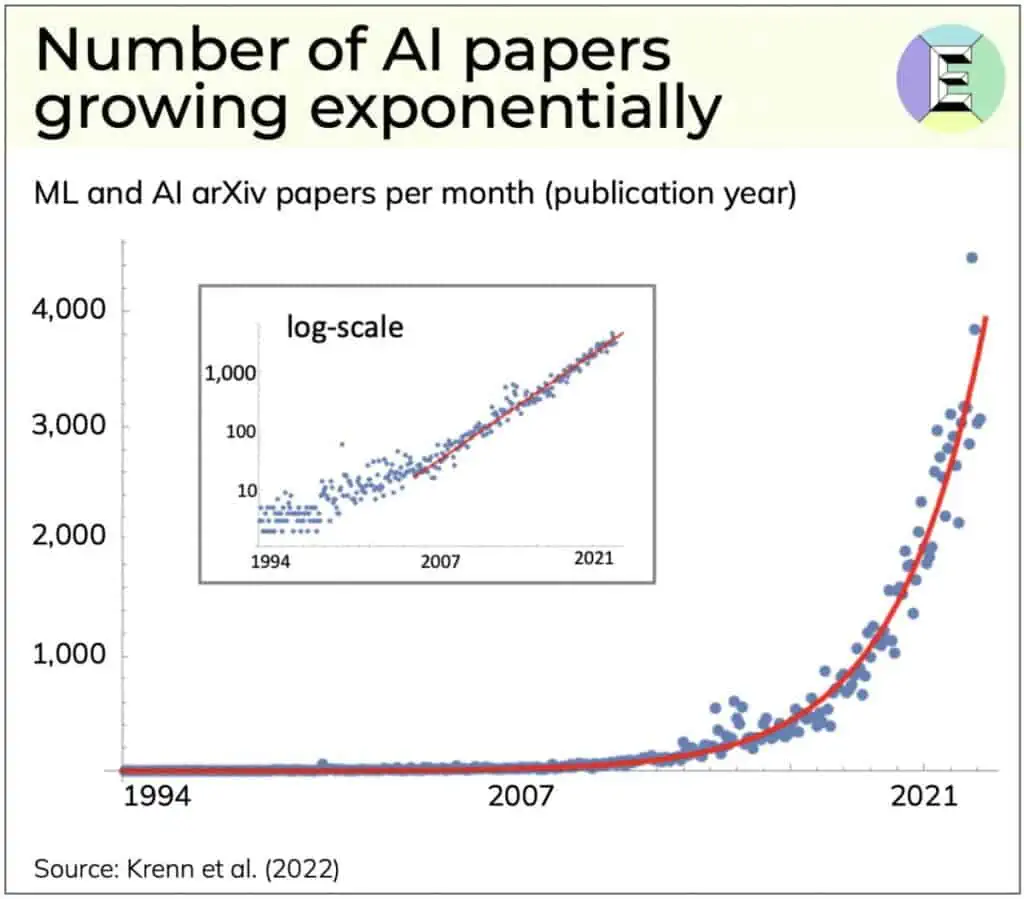

…and the amount of research into AI simply explodes.

It makes sense that it is suddenly and rapidly ‘arriving.’

The big job is that the world now has to figure out what to do with ‘it.’ Suffice it to say, I think the key trend for 2023 is that we will have to pay far more attention to what it represents in terms of promise and peril!

Science opportunities

Getting beyond the excitement of ChatGPT for a moment, consider where AI might take industries in the future.

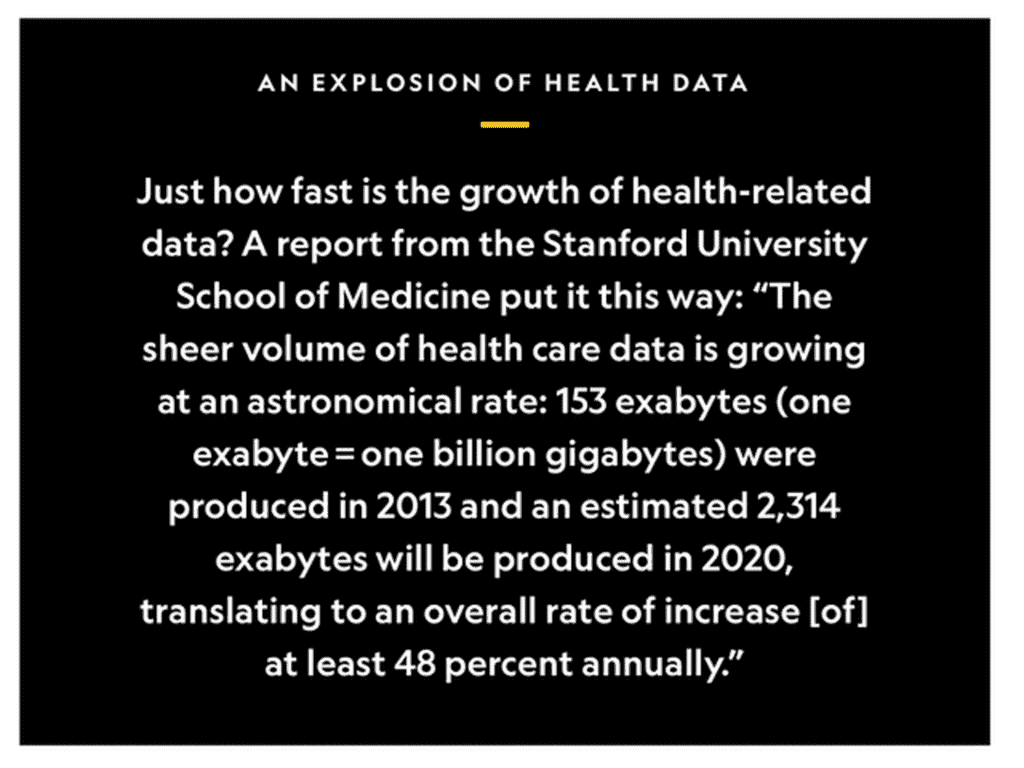

AI is certainly not new in the world of healthcare – we have long been struggling with an explosion in health data.

AI has, for quite some time, allowed us to manage the explosion of medical device technology, exponentiating knowledge, and more – through fascinating devices and concepts.

The fact is, we are going to continue to see a massive acceleration of science-based AI opportunities.

The same types of massive opportunities are emerging in the world of construction and manufacturing, agriculture and farming, automotive/trucking, and logistics. Everywhere we turn, there are remarkable opportunities to do remarkable things with a remarkable, fast-evolving technology.

And yet….

Might corporate implementations go wrong?

It’s the world of corporate implementations that presents so many intriguing opportunities – and the potential for promise and peril!

Two examples suffice.

One I saw online.

And one I did on my own:

FOMO, hype, startups, new ideas – I expect the corporate world to be flooded with ideas around the ‘next big thing’ in 2023 and beyond. History has taught us there will be massive breakthroughs, fascinating applications, and wonderful failures.

The Downside

There is also no doubt that this takes us into a long-predicted complex and scary world:

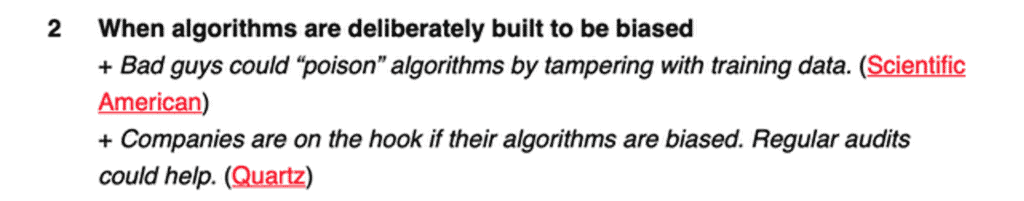

Algorithm bias:

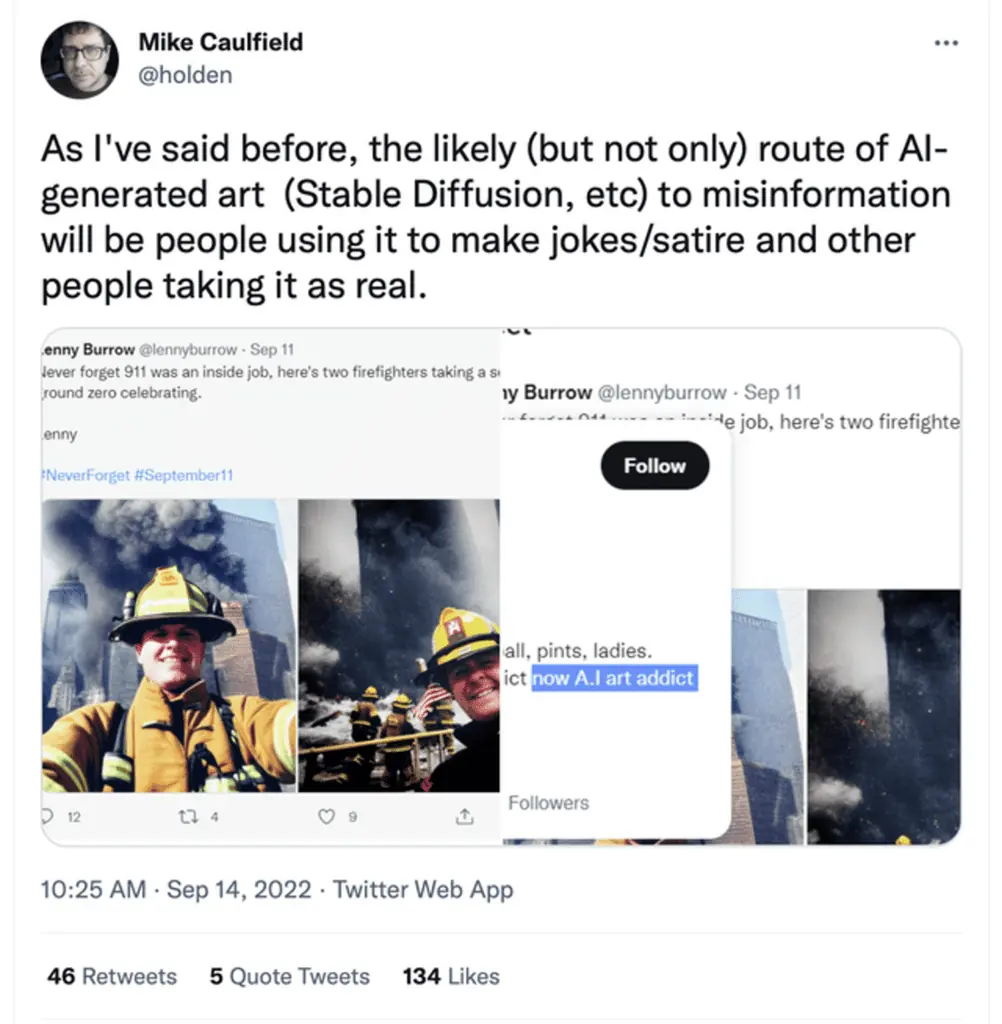

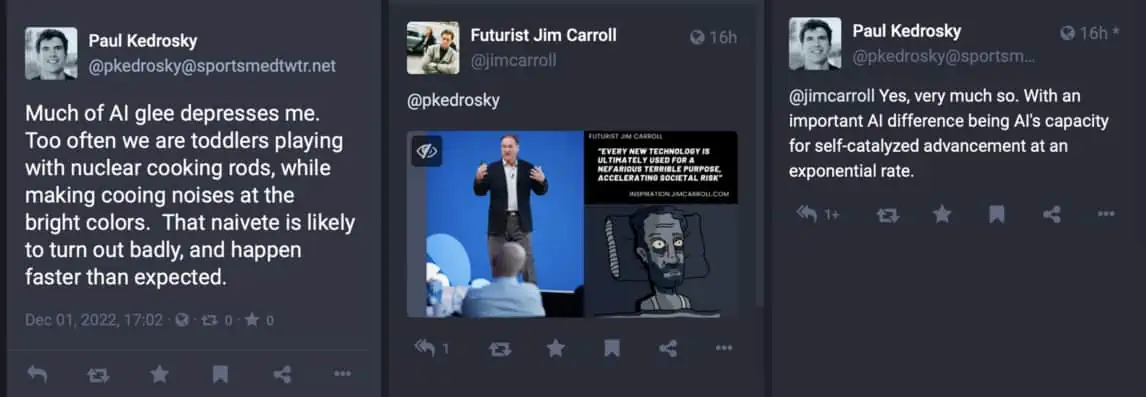

Today, we can barely manage the flood of false information generated by humans – how are we ever going to deal with it when it is generated at scale? I, like many others, have been watching with increasing alarm the sudden and fast arrival of all these new A.I. technologies. It’s everywhere – and every tech company is rushing to get involved. The result will be a massive rush to push products out the door, with little regard given to safety, ethics, and the potential for destructive misuse.

Over on Mastodon, @jacqueline (whoever she might be) stated the situation perfectly:

“society: damn misinfo at scale is getting a bit out of hand lately. seems like a problem.

tech guys: i have invented a machine that generates misinformation. is that helpful?”

People are worried – rightfully so – as to how information networks like Facebook, TikTok, Twitter, and others have been weaponized by various factions on the left and the right; by political parties and politicians; by sophisticated public relations campaigns and companies. Information is coming at us so fast and so furious that many people have lost the simple ability to judge what is real and what’s not.

And the rush to capitalize on the newest iterations of AI technology – barely months old in terms of use – is already seeing some awful results.

When Arena Group, the publisher of Sports Illustrated and multiple other magazines, announced—less than a week ago—that it would lean into artificial intelligence to help spawn articles and story ideas, its chief executive promised that it planned to use generative power only for good.

Then, in a wild twist, an AI-generated article it published less than 24 hours later turned out to be riddled with errors.

The article in question, published in Arena Group’s Men’s Journal under the dubious byline of “Men’s Fitness Editors,” purported to tell readers “What All Men Should Know About Low Testosterone.” Its opening paragraph breathlessly added that the article had been “reviewed and fact-checked” by a presumably flesh-and-blood editorial team. But on Thursday, a real fact-check on the piece came courtesy of Futurism, the science and tech outlet known for recently catching CNET with its AI-generated pants down just a few weeks ago.

The outlet unleashed Bradley Anawalt, the University of Washington Medical Center’s chief of medicine, on the 700-word article, with the good doctor digging up at least 18 “inaccuracies and falsehoods.” The story contained “just enough proximity to the scientific evidence and literature to have the ring of truth,” Anawalt added, “but there are many false and misleading notes.”

And there we have it. That’s our future.

Early Days Yet – and Massive Risk

The thing with a lot of this ‘artificial intelligence’ stuff is that it’s not. These are predictive language models, trained to construct sophisticated sentences based on massive data sets. Those data sets can be manipulated, changing the predictive outcome of the model. Many of these early releases have had small ethical efforts to ensure the A.I. data set does not include hate speech, racism, and other such things. That probably won’t last long; A.I. will be weaponized before we know it. The result? A.I. language tools will soon be able to generate a ridiculous amount of harmful content that will flood our world.

But it’s not just that – it’s the fact that the old truism GIGO applies – garbage in, garbage out. We are already seeing the lack of guardrails as companies rush to cash in. The Men’s Journal situation is but one small example. There are dozens, hundreds, soon to be thousands, millions. A.I. is soon to become an engine of misinformation, a factory of falsehoods, and a deployer of dishonesty.

My good friend Paul Kedrosky, an early-stage capital investor, sums it up nicely:

The Hysteria

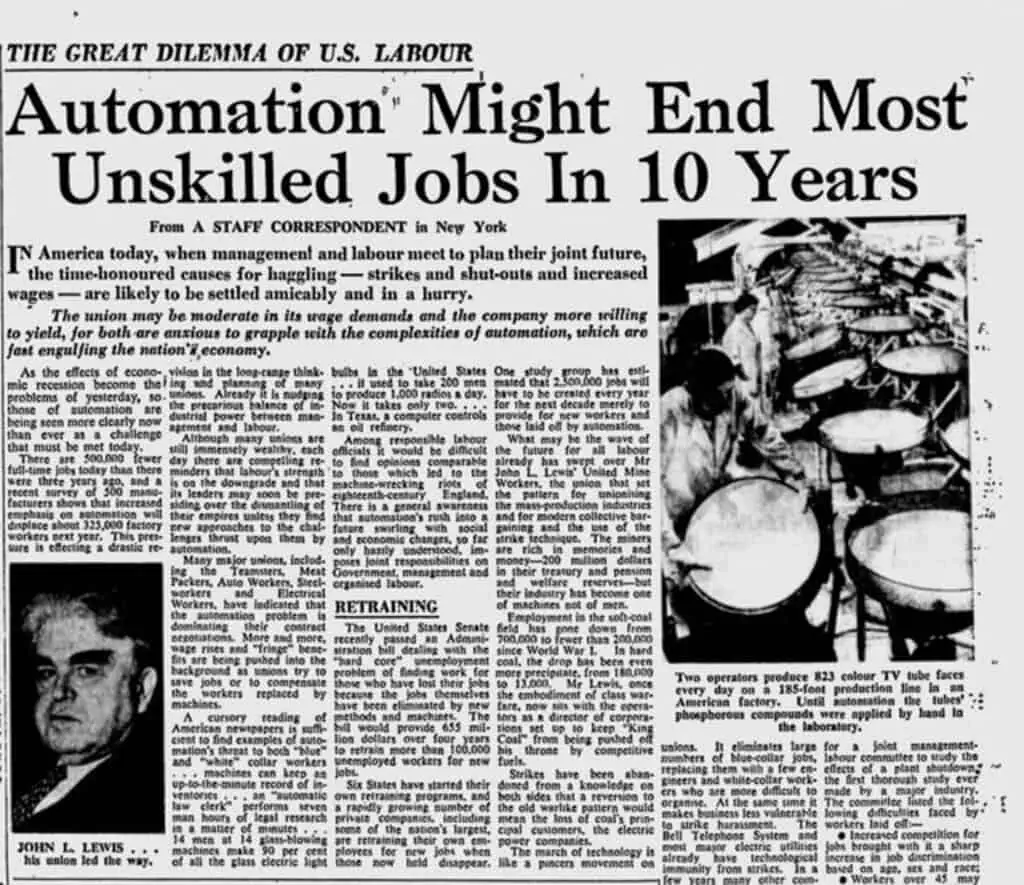

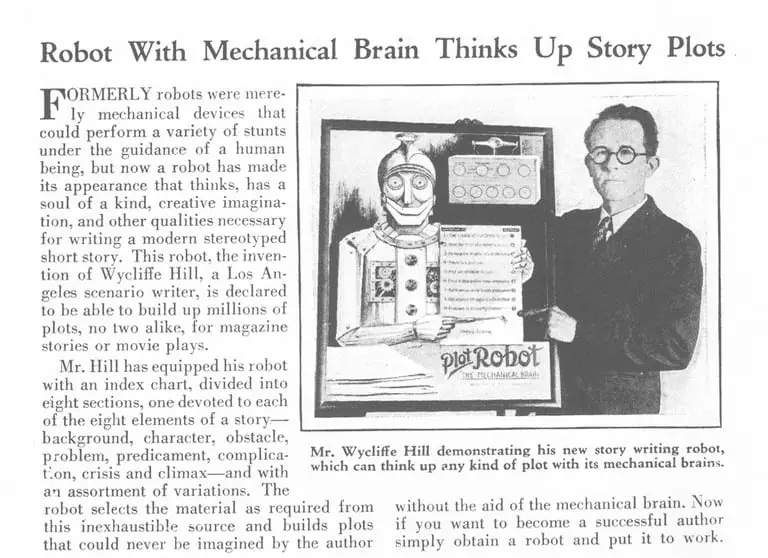

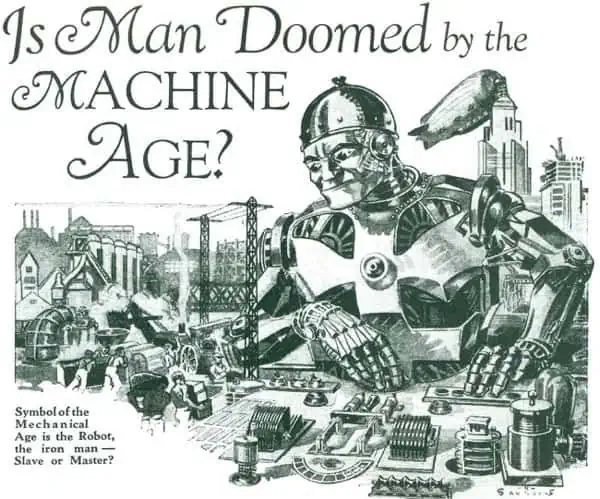

And with the new focus on this old technology comes the hysteria of yesteryear:

and the years before:

and before:

What does it all mean?

Both good and bad!

This conundrum of good and bad is nothing new – and has been with us as long as there has been a future. I wrote this in 1997 in my book Surviving the Information Age:

Not only have we grown up with skepticism about technology and computers due to failed forecasts, but we have developed a sense of suspicion and fear about its impact.

It’s clear that we had a sense of wonder in the 1950s and 1960s when it came to technology, but soon we began to encounter the darker side of what technology could do when we were introduced to HAL, the computer in the movie 2001: A Space Odyssey. Here at last, in the midst of the uproar and confusion that surrounded us in the 1960s, we had the perfect understanding of what a computer could be. It would be a faithful tool, with a level of intelligence on par with humans, if not exceeding them. It could speak, think and play a mean game of chess. The voice of HAL was soothing, relaxing and about as conversant as any other person.

The “computer as a life form” image was complete! HAL didn’t seem like a machine; rather, “he” was a partner on the voyage, strong, knowing and ever-present. So while watching the movie, we were at first reassured and fascinated. The future role of the computer in our lives could be quite positive, after all! Or so we thought.

As 2001: A Space Odyssey progressed, we became aware of an evil side to HAL; at the same time that “he” was smart, he was evil, to the extent that he was capable of committing the ultimate act of murder. We were stunned! Computers might not be simple electronic brains, about to deliver us into a world of the shortened work week! Instead, they could be evil, nasty devices, technology gone amuck!

Looking back, we can see that the duplicity of HAL heralded a new era, one that involved a changing attitude towards technology. It wasn’t just HAL but many other things, perhaps most importantly, what we witnessed with the horror of technology gone mad in the Vietnam war. We became skeptical of the wonders of technology in general.

Ralph Nader entered our consciousness, with his indications that something was wrong with the technology of the world. And over time, the future held for us not the exciting glow of wonderful technology but of nuclear plant meltdowns at Chernobyl and Three Mile Island. We saw the Apollo 1 mission burn on the pad with the loss of three lives and sat in terrorized silence when Challenger exploded in the sky.

We became skeptical of the benefits of all of this new technology and began to challenge the views of the scientists who pronounced it to be good.

There is a lesson to be learned from all of this: our attitude towards technology will always be one of enthusiasm balanced by skepticism.

As they say, what is old is new again. Welcome to the future!

That’s our future right there!

The sudden massive velocity of AI?

Stay tuned and buckle in!
